Setting Up Qdrant4 with AIWU AI Copilot: Vector Database Integration in WordPress

Integrating a vector database into your WordPress site can dramatically improve how your AI assistant understands and responds to user queries. One of the most powerful options for this is Qdrant4, an open-source, high-performance vector search engine that works seamlessly with tools like AIWU AI Copilot.

Unlike traditional keyword-based systems, Qdrant4 enables semantic retrieval, meaning your chatbot or content generator can understand intent and context, not just exact matches. This makes it ideal for use cases such as smart search, knowledge-aware chatbots, and Retrieval-Augmented Generation (RAG) inside WordPress.

In this article, we’ll walk through the entire process of setting up Qdrant4 for use with AIWU AI Copilot. No coding required: just clear steps, practical advice, and the real-world configuration details you need to get started.

Let’s dive in.

Why Use Qdrant4 with AIWU AI Copilot?

Before we jump into setup, let’s briefly explain why integrating Qdrant4 matters.

Qdrant4 is known for:

- High performance on semantic similarity searches

- Support for both local and cloud deployment

- Simple API and low overhead compared to other vector databases

- Scalability without vendor lock-in

This makes it a strong choice for users who want more control over their embeddings than Pinecone offers, especially if they’re working within existing infrastructure or have specific hosting needs.

When used with AIWU AI Copilot, Qdrant4 becomes the backbone of your AI’s contextual understanding. It stores the numeric representations of your content, called vectors, and allows fast retrieval when users ask questions. The result? A smarter, more accurate chatbot that pulls from your own data instead of guessing at answers.
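To make "semantic similarity" a little more concrete, here is a minimal sketch of the cosine similarity score that vector search typically relies on. The three-dimensional vectors and document names are made up for illustration; real embeddings have far more dimensions, and Qdrant4 performs this comparison for you at scale.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Toy "embeddings" (hypothetical values, only three dimensions).
query = [1.0, 0.0, 1.0]
docs = {
    "refund policy": [0.9, 0.1, 0.8],
    "shipping times": [0.0, 1.0, 0.1],
}

# Rank documents from most to least similar to the query.
ranked = sorted(docs, key=lambda name: cosine_similarity(query, docs[name]), reverse=True)
print(ranked)
```

The document whose vector points in nearly the same direction as the query ranks first, even if it shares no exact keywords with it.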

Now let’s move to the actual integration steps.

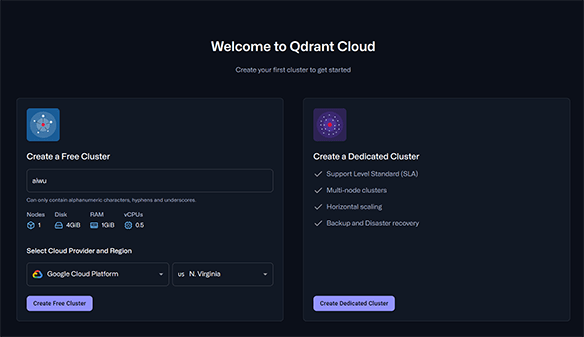

Step 1: Prepare Your Qdrant4 Instance

To connect Qdrant4 with AIWU AI Copilot, you first need a running instance of Qdrant4.

There are two ways to set this up:

- Local Installation – Best for testing or internal use

- Cloud Deployment – Recommended for production environments

For most WordPress users, a cloud-hosted version is preferable because it ensures uptime, accessibility, and scalability.

You can deploy Qdrant4 using:

- Managed services like Qdrant Cloud

- Self-hosting on a VPS or dedicated server

If you’re deploying locally, you might need to expose the service via a reverse proxy (like NGINX or Caddy) to make it accessible from your WordPress environment.

Once your Qdrant4 instance is online, verify it by sending a test request to the /collections endpoint. If you get a valid response, you’re ready to proceed.
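If you prefer to script that check, the sketch below builds the test request with Python's standard library. The host name and key are placeholders, the function names are hypothetical, and it assumes your instance answers like a standard Qdrant REST API: an optional `api-key` header and a JSON response whose `status` field is `"ok"`.

```python
import json
import urllib.request

def build_list_collections_request(base_url, api_key=None):
    """Build (but do not send) a GET request for the /collections endpoint."""
    url = base_url.rstrip("/") + "/collections"
    headers = {"Accept": "application/json"}
    if api_key:
        # Assumption: the key is passed in the "api-key" header.
        headers["api-key"] = api_key
    return urllib.request.Request(url, headers=headers, method="GET")

def instance_is_healthy(request, timeout=10):
    """Send the request and report whether the response status is "ok".

    urlopen raises an exception on network failures and HTTP error codes,
    so a False return means the server answered with an unexpected body.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body.get("status") == "ok"

# Placeholder values: replace with your own instance details.
request = build_list_collections_request(
    "https://your-qdrant-instance.com:6333", api_key="YOUR_API_KEY"
)
print(request.get_method(), request.full_url)
```

Calling `instance_is_healthy(request)` from a machine that can reach your server performs the actual check.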

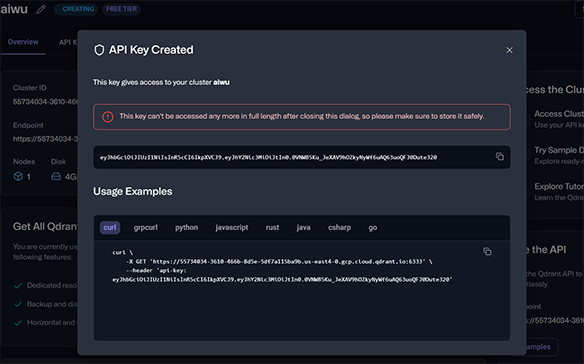

Step 2: Determine the Base URL and Authentication Settings

After confirming your Qdrant4 instance is running, note down two critical pieces of information:

- Base API URL: This is the address where your Qdrant4 server lives. It typically looks like https://your-qdrant-instance.com:6333

- API Key: Retrieve your API key from the configuration file or dashboard

The base URL is essential because AIWU AI Copilot uses it to send embedding vectors and perform searches. Make sure the URL includes the correct port, usually 6333 unless configured otherwise.

Once you have these values, keep them handy; you’ll enter them directly into AIWU AI Copilot shortly.
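URL typos are a frequent cause of failed connections, so it can help to sanity-check the value before pasting it anywhere. The helper below is a hypothetical sketch, not part of AIWU AI Copilot: it trims whitespace and a trailing slash, then flags a missing protocol, host, or port.

```python
from urllib.parse import urlsplit

def check_base_url(raw_url):
    """Return (cleaned_url, problems) for a candidate base URL."""
    cleaned = raw_url.strip().rstrip("/")
    parts = urlsplit(cleaned)
    problems = []
    if parts.scheme not in ("http", "https"):
        problems.append("missing http:// or https:// prefix")
    if not parts.hostname:
        problems.append("missing host name")
    else:
        try:
            port = parts.port  # raises ValueError for a malformed port
        except ValueError:
            problems.append("port is not a valid number")
        else:
            if port is None:
                problems.append("no explicit port (6333 is the usual default)")
    return cleaned, problems

for candidate in (
    " https://your-qdrant-instance.com:6333/ ",
    "https://your-qdrant-instance.com",
    "qdrant.mydomain.com:6333",
):
    print(check_base_url(candidate))
```

A missing port is reported as a warning rather than silently filled in, because a reverse proxy may legitimately serve the API on the standard HTTPS port.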

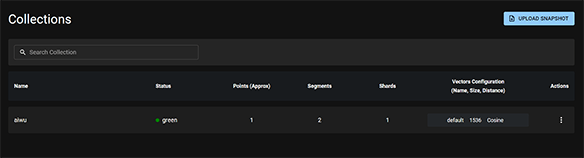

Step 3: Create a Collection in Qdrant4

A collection in Qdrant4 is essentially a container for your embeddings, similar to a table in a relational database.

To create a new collection, go to your Qdrant4 dashboard or send a PUT request to /collections/{collection_name} with the following parameters:

- collection_name: Choose something descriptive, like aiwu or faq_vectors. It goes in the URL path, not in the request body

- vector_size: Set this to 1536 if you’re using OpenAI’s text-embedding-3-small model

- distance: Set this to Cosine (cosine similarity), the most common metric for comparing text embeddings

Here’s what a typical JSON body looks like for PUT /collections/aiwu:

    {
      "vectors": {
        "size": 1536,
        "distance": "Cosine"
      }
    }

Once created, this collection will store all the vector representations of your dataset, allowing AIWU AI Copilot to find relevant chunks of text when needed.

Alternatively, you can simply enter the desired collection name in the AIWU AI Copilot plugin settings and click the “Test Connection” button; the plugin will then create the collection in Qdrant4 automatically.
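If you would rather create the collection from a script, the following sketch builds the request with Python's standard library. It follows Qdrant's documented REST form, `PUT /collections/{collection_name}`, with the name in the URL path and the vector settings in the body. The function name, host, and key are placeholders chosen for illustration.

```python
import json
import urllib.request
from urllib.parse import quote

def build_create_collection_request(base_url, collection_name,
                                    vector_size=1536, distance="Cosine",
                                    api_key=None):
    """Build (but do not send) a request that creates a collection."""
    # The collection name is part of the path, so escape it defensively.
    url = f"{base_url.rstrip('/')}/collections/{quote(collection_name, safe='')}"
    body = {"vectors": {"size": vector_size, "distance": distance}}
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["api-key"] = api_key
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="PUT",
    )

# Placeholder values: replace with your own instance details.
create_request = build_create_collection_request(
    "https://your-qdrant-instance.com:6333", "aiwu", api_key="YOUR_API_KEY"
)
print(create_request.get_method(), create_request.full_url)
print(create_request.data.decode("utf-8"))
```

Pass the result to `urllib.request.urlopen(create_request)` to actually send it. Keeping construction and sending separate makes it easy to inspect the request first.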

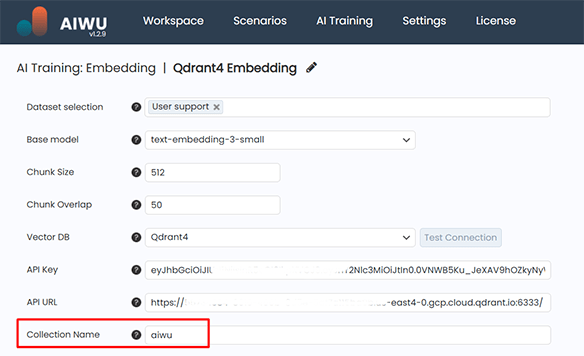

Step 4: Configure Qdrant4 Inside AIWU AI Copilot

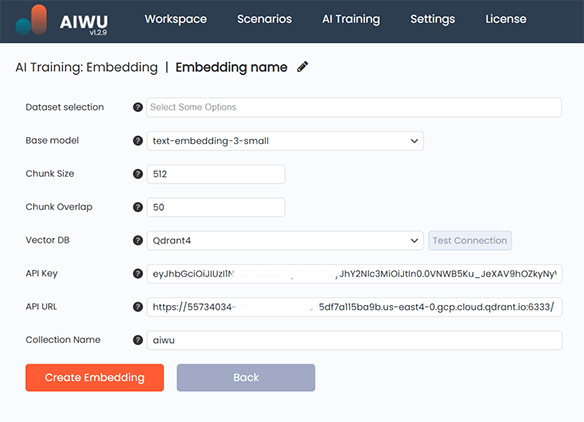

Now that your Qdrant4 instance and collection are ready, switch back to WordPress and navigate to the Embeddings tab under AIWU AI Copilot.

Click Create New to start configuring the connection.

You’ll be asked to provide:

- A name for your embedding collection

- One or more datasets of type Raw Text

- An OpenAI model (text-embedding-3-small is recommended)

- A vector database: here, choose Qdrant4

After selecting Qdrant4, the system will prompt you to fill in the following fields:

- API URL: Enter the full URL including protocol and port, for example https://qdrant.mydomain.com:6333

- API Key (optional): If your Qdrant4 instance uses authentication, paste the key here

- Collection Name: Type in the name of the collection you created earlier, e.g., aiwu

Once everything is filled out, click Test Connection to ensure AIWU can communicate with your Qdrant4 instance.

If the test passes, proceed to the next step. If not, double-check your Qdrant4 server status, firewall settings, and API URL formatting before retrying.

Step 5: Run the Embedding Job

With the Qdrant4 connection established, you’re now ready to generate and upload your embeddings.

Make sure you’ve selected a dataset of type Raw Text and status Ready. This dataset should reflect the domain you want your AI to understand, whether it’s product descriptions, support documentation, or blog posts.

Next, define the chunk size and overlap:

- Max Length per Item: Default is 512 tokens, which works well for most applications

- Chunk Overlap: Default is 50 tokens, helping preserve context between segments

These values are suitable for general use. Only adjust them if you notice gaps in semantic retrieval later.
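To see how these two settings interact, here is a minimal sketch of overlapping chunking. It is an illustration only: it treats whitespace-separated words as "tokens", whereas the plugin and the OpenAI model count tokens with a real tokenizer, and the plugin's actual splitting logic may differ.

```python
def chunk_tokens(tokens, max_length=512, overlap=50):
    """Split a token list into chunks of at most max_length tokens,
    where each chunk repeats the last `overlap` tokens of the previous one."""
    if max_length <= 0:
        raise ValueError("max_length must be positive")
    if not 0 <= overlap < max_length:
        raise ValueError("overlap must be >= 0 and smaller than max_length")
    step = max_length - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_length])
        if start + max_length >= len(tokens):
            break  # this chunk already reached the end of the text
    return chunks

# Stand-in for tokenized text: 1000 whitespace-separated "tokens".
words = ("lorem ipsum " * 500).split()
chunks = chunk_tokens(words, max_length=512, overlap=50)
print(len(chunks), [len(c) for c in chunks])
```

With the defaults, each window advances by 462 tokens, so a sentence that straddles a boundary still appears intact in at least one chunk as long as it is shorter than the overlap.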

Once you’ve confirmed all settings, click Run Embedding.

Behind the scenes, AIWU AI Copilot will:

- Split your dataset into manageable blocks

- Send each block to the OpenAI embedding model

- Convert the text into numerical vectors

- Upload those vectors into your Qdrant4 collection
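The last of those steps can be sketched as follows. This is not the plugin's actual code: it only shows the general shape of a body for Qdrant's point upsert endpoint (`PUT /collections/{collection_name}/points`). The payload field names (`text`, `source`, `chunk_index`) and the ID scheme are assumptions made for the example.

```python
import uuid

def build_upsert_body(chunks, vectors, expected_size=1536, source="example-dataset"):
    """Pair each text chunk with its embedding and build an upsert body."""
    if len(chunks) != len(vectors):
        raise ValueError("need exactly one vector per chunk")
    points = []
    for index, (text, vector) in enumerate(zip(chunks, vectors)):
        if len(vector) != expected_size:
            raise ValueError(
                f"chunk {index}: vector has {len(vector)} dimensions, "
                f"collection expects {expected_size}"
            )
        # Point IDs must be unsigned integers or UUIDs; a deterministic
        # UUID means re-running the job overwrites instead of duplicating.
        point_id = str(uuid.uuid5(uuid.NAMESPACE_URL, f"{source}#{index}"))
        points.append({
            "id": point_id,
            "vector": list(vector),
            "payload": {"text": text, "source": source, "chunk_index": index},
        })
    return {"points": points}

# Tiny demo with 4-dimensional stand-in vectors instead of real embeddings.
demo_body = build_upsert_body(
    ["First chunk of text.", "Second chunk of text."],
    [[0.1, 0.2, 0.3, 0.4], [0.4, 0.3, 0.2, 0.1]],
    expected_size=4,
)
print(len(demo_body["points"]), demo_body["points"][0]["payload"])
```

Checking the vector length before upload catches a dimension mismatch early, instead of surfacing it as a failed embedding job.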

Depending on the size of your dataset and current OpenAI API speed, this may take several minutes to an hour.

During processing, the status will show as Processing. Once complete, it changes to Ready, and you can begin using the embedding in real-world features.

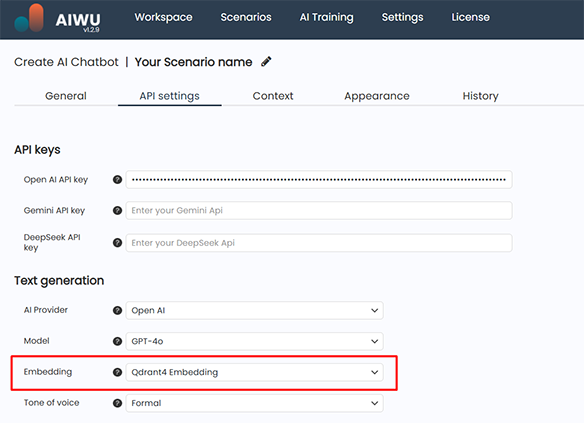

Step 6: Use the Qdrant4-Powered Chatbot in Production

Once the embedding is ready and verified, you can select it in any AIWU AI Copilot feature that supports external knowledge:

- Chatbots: Teach your assistant to answer questions based on your own content

- Content generation: Enhance article writing with context pulled from your knowledge base

Behind the scenes, AIWU AI Copilot uses Retrieval-Augmented Generation (RAG):

- When a user asks a question, the system generates a vector representation of the query

- It searches your Qdrant4 collection for the most semantically similar entries

- Those entries are injected into the prompt as context

- The final request is sent to the language model

This ensures your AI doesn’t rely solely on pre-training; it uses your own content to deliver accurate, timely responses.
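The retrieval and prompt-assembly steps can be sketched like this. The search body matches the shape accepted by Qdrant's `POST /collections/{collection_name}/points/search` endpoint. The prompt wording, the `min_score` cutoff, and the `text` payload field are assumptions for illustration, not what AIWU AI Copilot actually sends.

```python
def build_search_body(query_vector, limit=3):
    """Body for a similarity search that also returns stored payloads."""
    return {"vector": list(query_vector), "limit": limit, "with_payload": True}

def build_prompt(question, search_result, min_score=0.0):
    """Inject the retrieved chunks into a prompt as numbered context."""
    hits = [hit for hit in search_result if hit.get("score", 0.0) >= min_score]
    context = "\n\n".join(
        f"[{number}] {hit['payload']['text']}"
        for number, hit in enumerate(hits, start=1)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

# Hand-written stand-in for the "result" list of a search response.
sample_hits = [
    {"id": 1, "score": 0.91, "payload": {"text": "Refunds are issued within 14 days."}},
    {"id": 2, "score": 0.40, "payload": {"text": "We ship worldwide."}},
]
prompt = build_prompt("How long do refunds take?", sample_hits, min_score=0.5)
print(prompt)
```

Filtering by score keeps weakly related chunks out of the prompt, which tends to matter more as the knowledge base grows.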

Common Issues and How to Fix Them

Even with careful setup, some issues may arise when connecting Qdrant4. Here are the most common ones and how to sort them out.

Issue 1: Connection Fails

If the Test Connection button returns an error, double-check:

- Whether your Qdrant4 instance is reachable from the internet

- Whether the port (usually 6333) is open and properly routed

- Whether there’s a firewall or CORS policy blocking access

Sometimes, a simple restart of the Qdrant4 service fixes connectivity problems. Other times, the issue lies in incorrect API URLs or missing collections.

Issue 2: Embedding Upload Fails

If the system reports an error during the embedding process, the problem likely comes from:

- Incorrect vector dimension (ensure it matches your OpenAI model: 1536 for text-embedding-3-small)

- Missing or invalid collection in Qdrant4

- Network instability during transfer

Always validate your collection structure in Qdrant4 before uploading. If necessary, delete and recreate the collection with the right parameters.
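One way to validate the structure is to fetch the collection details (`GET /collections/{collection_name}`) and compare them with what your embedding model produces. The sketch below works on the parsed JSON response. It assumes a collection with a single unnamed vector configuration, and the function name is hypothetical.

```python
def collection_mismatches(collection_info, expected_size=1536, expected_distance="Cosine"):
    """Return a list of human-readable problems with the collection config.

    collection_info is the parsed JSON of a collection-details response.
    Assumption: the collection uses one unnamed vector configuration.
    """
    vectors = collection_info["result"]["config"]["params"]["vectors"]
    problems = []
    if vectors.get("size") != expected_size:
        problems.append(
            f"vector size is {vectors.get('size')}, expected {expected_size}"
        )
    if vectors.get("distance") != expected_distance:
        problems.append(
            f"distance is {vectors.get('distance')}, expected {expected_distance}"
        )
    return problems

# Trimmed, hand-written example of a response for a misconfigured collection.
sample_info = {
    "status": "ok",
    "result": {
        "config": {"params": {"vectors": {"size": 768, "distance": "Cosine"}}},
    },
}
print(collection_mismatches(sample_info))
```

An empty list means the collection matches. Anything else is a signal to delete and recreate it before uploading.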

Issue 3: Poor Retrieval Quality

If your chatbot isn’t pulling the most relevant answers from the Qdrant4 index, it could be due to:

- Inconsistent or noisy source data

- Too large or too small chunk sizes

- Low-quality embeddings from poorly structured text

Fix this by reviewing your dataset and cleaning up the source text, then re-running the embedding job. Clean, focused content yields better semantic retrieval.

Qdrant4 Is a Strong Choice for Custom AI

Setting up Qdrant4 with AIWU AI Copilot gives you fine-grained control over how your AI handles context. Unlike keyword-based systems, this integration lets your chatbot understand meaning, not just words.

While Pinecone offers simplicity, Qdrant4 provides flexibility. It’s particularly useful if you already host infrastructure internally or prefer open-source solutions with minimal dependencies.

And once connected, it works quietly in the background, giving your AI the ability to pull from your own knowledge every time it generates a response.

If you’re serious about building smarter AI experiences in WordPress, Qdrant4 is a solid foundation, and with AIWU AI Copilot, it’s easier than ever to integrate into your workflow.